To achieve algorithmic fairness we need to bridge AI technology with society and legal systems

Researchers are calling for a more ambitious study of algorithmic fairness that bridges AI technology with social and legal components. Our research provides insights into how to achieve that.

It’s fantastic that Arvind Narayanan, in the attached recent post, is advocating for "a more ambitious study of fairness and justice in algorithmic decision making in which we attempt to model the sociotechnical system, not just the technical subsystem".

Among other works, Narayanan co-authored a pioneering textbook 📕, “Fairness and Machine Learning”, which discusses related matters. The book includes chapters on causality and explainability 📊, both crucial for understanding and modeling sociotechnical systems.

The book 📕 also includes a section on anti-discrimination law ⚖️. I consider law a part of our sociotechnical systems. Thus, it’s equally important to bridge legal instruments and concepts with technical methods and concepts.

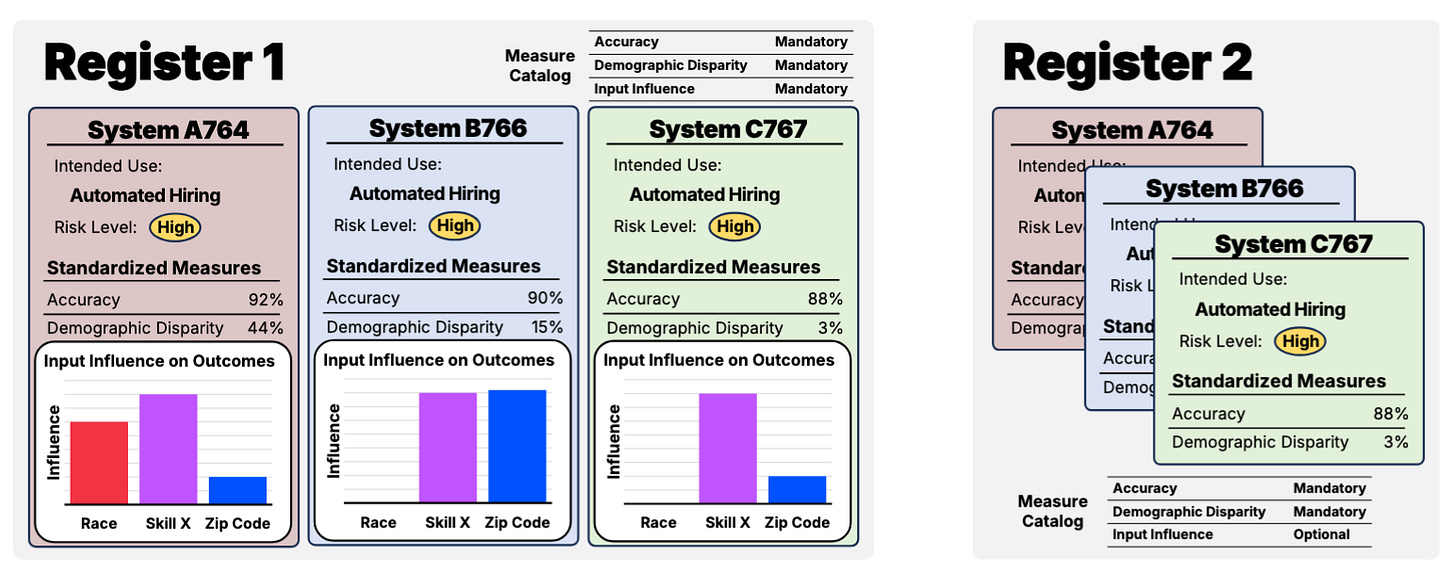

I always felt that these important parts of the book 📕 could be better integrated with its more technical contributions. We address these matters in our own research by marrying fairness with explainability 📊 and legal instruments ⚖️ [1] and by discussing the issues with disparate-impact liability in the context of AI systems [2], long before Donald Trump issued the respective executive order ⚖️. These studies made us realize that algorithmic fairness requires one more thing: transparency 🫧. So we recently wrote a white paper (with @Warren, …, @Perello, @Zick) introducing a “Global Framework for Exchanging Information on AI Systems” (see the illustration below) [3]. Such a framework would help to bridge the technical, social, and legal components of algorithmic fairness. We write that it “would enable and encourage traceability and accountability of AI technology from ultimate source, through enterprise supply chains, right through to consumers and the impacted public” [3].

I’m happy that excellent researchers, such as Narayanan, are now calling for a more ambitious study of algorithmic fairness that bridges technical, social, and legal components. How to do that? That’s what our research focuses on and what I teach in my “Responsible AI” course (developed at UMass Amherst, now offered at University College Dublin). 🧑‍🏫

References:

[1] Grabowicz, P. A., Perello, N., & Mishra, A. (2022). Marrying Fairness and Explainability in Supervised Learning. 2022 ACM Conference on Fairness, Accountability, and Transparency, 1905–1916. https://doi.org/10.1145/3531146.3533236

[2] Perello, N., & Grabowicz, P. (2024). Fair Machine Learning Post Affirmative Action. ACM SIGCAS Computers and Society, 52(2), 22. https://doi.org/10.1145/3656021.3656029

[3] Buckley, W., Byrne, A., Perello, N., Cousins, C., Yasseri, T., Zick, Y., & Grabowicz, P. (2026). Towards AI Transparency and Accountability: A Global Framework for Exchanging Information on AI Systems. https://doi.org/10.48550/arXiv.2307.13658